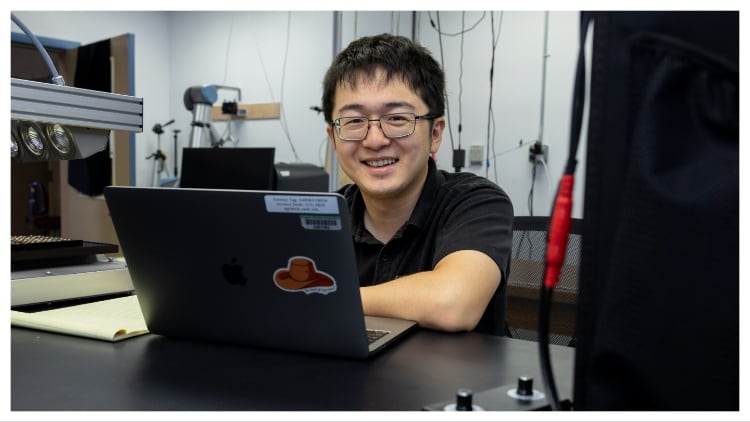

The team from the University of Arkansas System Division of Agriculture explained that, although machine vision is already being applied in the food sector, current machine-learning models for predicting food quality are not as consistent as human evaluators.

The question this study asks is: Is human perception reliable enough to train models, especially when factors such as clever illumination come into play?

Most algorithms are based on human-labelled observations or simple colour information, with the researchers claiming no previous studies have considered the effect lighting can have on human perception and how these biases can subsequently affect vision model training for food quality inspection.

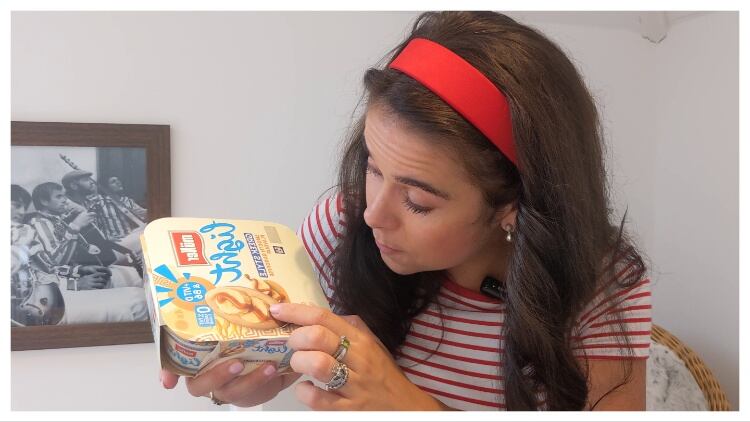

To plug this gap, the researchers showed a human panel digital images of lettuce samples captured under different illumination conditions.

The lettuces were photographed over the course of eight days to capture different levels of browning, under lighting that varied in brightness and in colour temperature, from a bluish, cool tone to an orangey, warm tone. This produced a dataset of 675 images.

On each of five consecutive days, the human panel evaluated 75 images of Romaine lettuce chosen at random from the pool of 675. Panellists were asked to rate each image on a scale of zero (not fresh) to 100 (extremely fresh).

Purchase intent and overall liking were also measured for each image, but those data were not used in the study.

The 109 study participants were from a wide range of incomes and age groups, and none had colour blindness or vision problems.

Among the entire group, 89 completed every sensory task involved in the study. Those who did not complete all the sessions were not included in the following analysis.

Several well-established machine learning models were applied to evaluate the same images as the human panel. Different neural network models used the sample images as inputs and were trained to predict the corresponding average human grading to better mimic human perception.
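The target-building step described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the researchers' code: the function name `mean_scores`, the tuple layout of the ratings and the sample values are all hypothetical. It keeps only panellists who attended every session, then averages their scores per image to give the value a model would be trained to predict.

```python
from collections import defaultdict
from statistics import mean


def mean_scores(ratings, sessions_required):
    """Average the freshness scores per image, using only full completers.

    ratings: iterable of (participant_id, session, image_id, score) tuples,
             where score is on the 0-100 scale (hypothetical layout).
    sessions_required: number of distinct sessions a participant must have
                       attended to be kept in the analysis.
    """
    # Record which sessions each participant took part in.
    sessions_by_participant = defaultdict(set)
    for participant, session, _image, _score in ratings:
        sessions_by_participant[participant].add(session)

    # Keep only participants who completed every session.
    completers = {
        participant
        for participant, sessions in sessions_by_participant.items()
        if len(sessions) >= sessions_required
    }

    # Collect the completers' scores for each image.
    scores_by_image = defaultdict(list)
    for participant, _session, image, score in ratings:
        if participant in completers:
            scores_by_image[image].append(score)

    # The per-image mean is the training target for a model.
    return {image: mean(scores) for image, scores in scores_by_image.items()}


# Hypothetical ratings: p3 attended only one of the two sessions.
example_ratings = [
    ("p1", 1, "img_a", 80), ("p1", 2, "img_b", 40),
    ("p2", 1, "img_a", 60), ("p2", 2, "img_b", 20),
    ("p3", 1, "img_a", 0),
]
targets = mean_scores(example_ratings, sessions_required=2)
```

In this toy example the scores from `p3` are dropped, so the target for `img_a` is the mean of 80 and 60 only.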

These data were then used to teach the machine how human perception shifted under the different lighting conditions and to highlight the differences between the panellists’ perceptions.

The study showed that computer prediction errors can be decreased by about 20% using data from human perceptions of photos under different lighting conditions.
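To make the "about 20%" figure concrete, the sketch below shows how a relative reduction in prediction error can be computed. The article does not say which error metric the researchers used, so mean absolute error is an assumption here, and the function names and all numbers are hypothetical values chosen only to illustrate the arithmetic.

```python
from statistics import mean


def mean_absolute_error(predictions, targets):
    """Average absolute gap between predicted and human mean scores."""
    return mean(abs(p - t) for p, t in zip(predictions, targets))


def relative_reduction(baseline_error, new_error):
    """Fraction by which new_error is lower than baseline_error."""
    return (baseline_error - new_error) / baseline_error


# Hypothetical mean human freshness scores for three images.
human_means = [70, 30, 50]

# Hypothetical predictions from a baseline model and an improved model.
baseline_predictions = [80, 20, 60]
improved_predictions = [78, 22, 58]

baseline_mae = mean_absolute_error(baseline_predictions, human_means)
improved_mae = mean_absolute_error(improved_predictions, human_means)
reduction = relative_reduction(baseline_mae, improved_mae)
```

With these made-up numbers the baseline is off by 10 points on average and the improved model by 8, which is a relative reduction of one fifth.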

When compared to an ‘established model’ that trained a computer using pictures without human perception variability, the new approach proved to be more effective.

The team believe their findings could be used in the future to further improve machine-based vision within the quality control environment, and even to create an app for consumers that helps them pick out fresh produce in the grocery setting.
